Assignment 4

Probabilistic Models of Human and Machine Intelligence

CSCI 7222

Assigned 10/1/2013

Due 10/8/2013

Goal

This assignment will give you practice in determining conditional independence and performing exact inference.

PART I

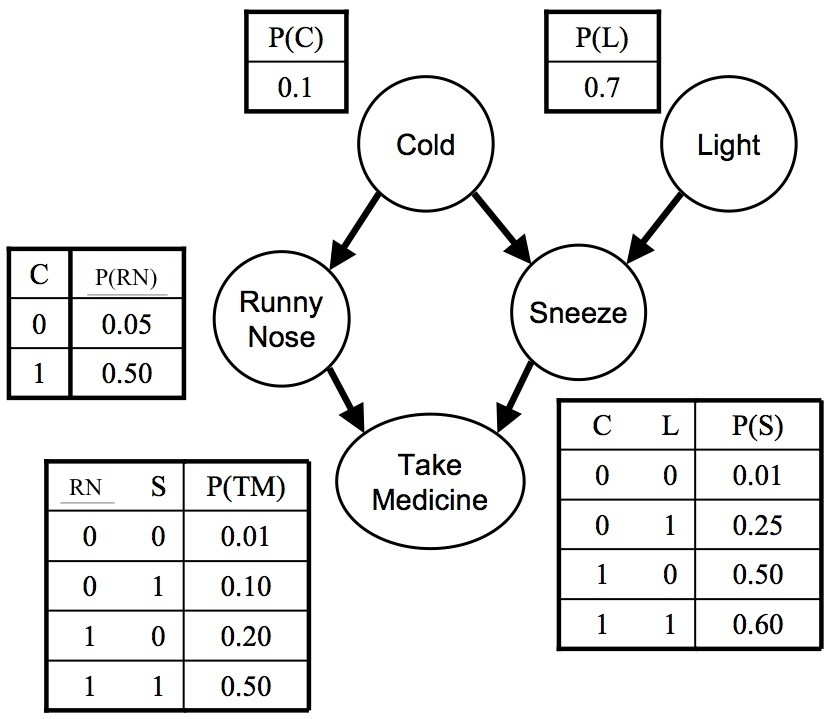

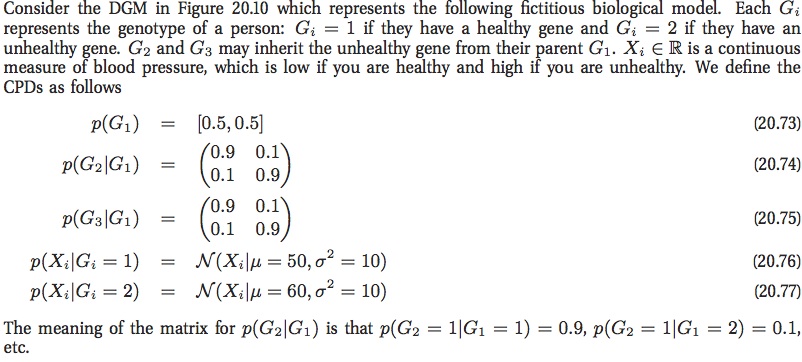

Consider the Bayes net with 5 binary random variables. (Thank you to Pedro Domingos of U. Washington for the example.)

1. What is the Markov blanket of Sneeze?

2. What is the Markov blanket of Take Medicine?

3. Write the expression for the joint probability, P(L, C, RN, S, TM), in terms of the conditional probability distributions.

4. Moralize the above graph to obtain an equivalent Markov net.

5. Write the joint probability function for the Markov net, with one term per maximal clique. Show the correspondence between the potential functions in this equation and the conditional probability distributions in question 3.

6. Is this graph a polytree? If not, what links might you add or remove to make it into a polytree? (A polytree is a directed graph with no undirected cycles; it is a generalization of a tree that allows for a node to have multiple parents.)

7. Which of the following are true:

(a) C ⊥ TM | RN, S

(b) TM ⊥ C | S

(c) C ⊥ L

(d) C ⊥ L | TM

(e) RN ⊥ L | TM

(f) RN ⊥ L

(g) RN ⊥ L | S

(h) RN ⊥ L | C, S

8. Compute P(C=1 | TM=1, RN=0, L=0). Note that this conditional can be expressed as the ratio of P(C=1, TM=1, RN=0, L=0) and P(TM=1, RN=0, L=0).
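The ratio form noted in question 8 can be sketched as inference by enumeration. The example below is a minimal illustration on a hypothetical three-variable net A -> C <- B, not the net in this assignment; the variable names, the structure, and every probability value are made-up assumptions. The numerator and the denominator are each obtained by summing the joint over the variables that are not pinned.

```python
from itertools import product

# Hypothetical CPTs for a toy net A -> C <- B (all numbers are made up).
P_A = {1: 0.3, 0: 0.7}
P_B = {1: 0.6, 0: 0.4}
# P(C=1 | A=a, B=b), keyed by (a, b).
P_C1_GIVEN_AB = {(0, 0): 0.1, (0, 1): 0.5, (1, 0): 0.7, (1, 1): 0.9}


def joint(a, b, c):
    """P(A=a, B=b, C=c) from the factorization P(A) P(B) P(C | A, B)."""
    p_c1 = P_C1_GIVEN_AB[(a, b)]
    return P_A[a] * P_B[b] * (p_c1 if c == 1 else 1.0 - p_c1)


def marginal(**fixed):
    """Sum the joint over every variable that is not pinned in `fixed`."""
    total = 0.0
    for a, b, c in product((0, 1), repeat=3):
        assignment = {"a": a, "b": b, "c": c}
        if all(assignment[name] == value for name, value in fixed.items()):
            total += joint(a, b, c)
    return total


def conditional(query, evidence):
    """P(query | evidence) = P(query, evidence) / P(evidence)."""
    return marginal(**query, **evidence) / marginal(**evidence)


# P(A=1 | C=1): B is a nuisance variable and is summed out in both terms.
posterior = conditional({"a": 1}, {"c": 1})
print(posterior)
```

Enumeration touches every joint assignment, so it is only practical for small nets like this one; it is shown here because it mirrors the ratio definition directly.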

PART II

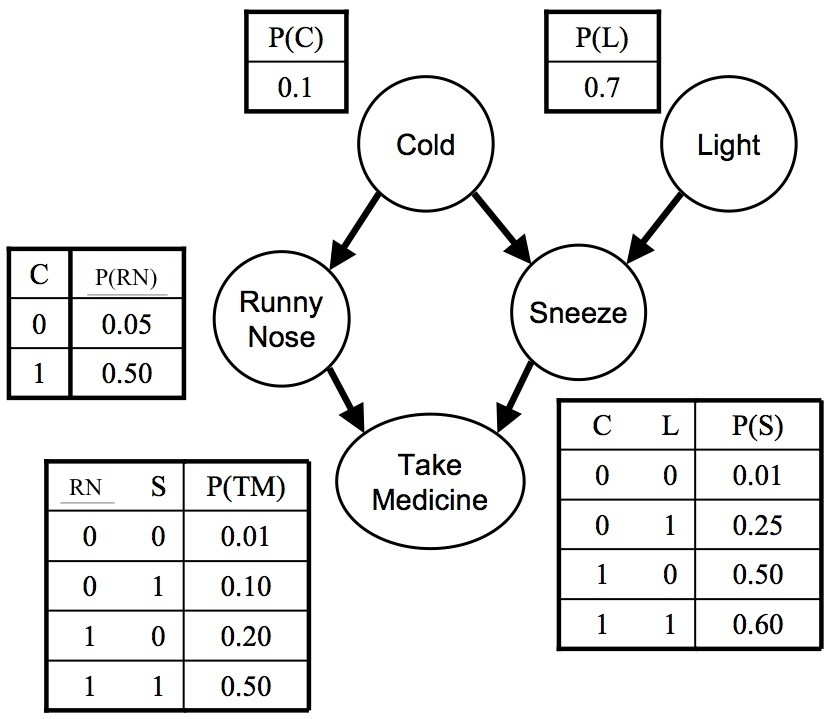

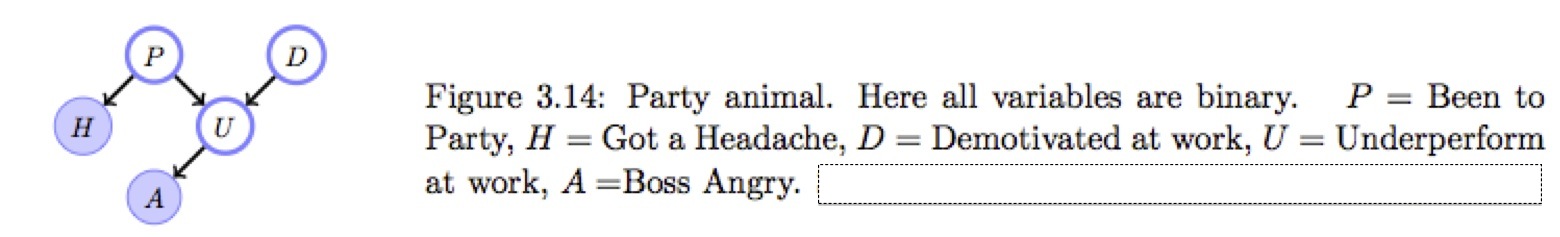

1. Write the joint distribution over A, D, U, H, and P in this graphical model (from Barber, Fig 3.14). Ignore the shading of H and A.

2. Write out an expression for P(H) as a summation over nuisance variables in a manner that would be appropriate for efficient variable elimination. (Don't do any numerical computation; simply write the expression in terms of the conditional probabilities and summations over the nuisance variables. Arrange the summations as we did in the variable elimination examples to be efficient in computing partial results.)

3. Write out a simplified expression for P(U=u | D=d) in a manner that would be appropriate for variable elimination. By 'simplified expression', I mean to reduce the expression to the simplest possible form, eliminating constants and unnecessary terms.
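As a reminder of what "arranging the summations" means, here is a sketch on a hypothetical chain W -> X -> Y -> Z, which is not the graph in this part; the variable names are assumptions chosen to avoid clashing with A, D, U, H, and P. Each sum is pushed inward until it sits directly in front of the factors that mention its variable, so every inner sum produces a small intermediate table rather than a table over all variables at once.

```latex
% Hypothetical chain W -> X -> Y -> Z (not the graph in this assignment).
\begin{align*}
P(z) &= \sum_{w}\sum_{x}\sum_{y} P(w)\,P(x \mid w)\,P(y \mid x)\,P(z \mid y) \\
     &= \sum_{y} P(z \mid y) \sum_{x} P(y \mid x) \sum_{w} P(x \mid w)\,P(w)
\end{align*}
```

Read from right to left, the innermost sum over w yields a function of x alone, the next sum yields a function of y alone, and the outermost sum yields the marginal over z.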

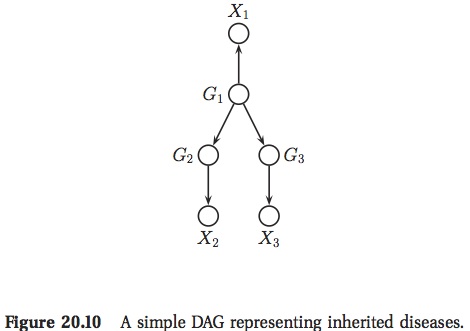

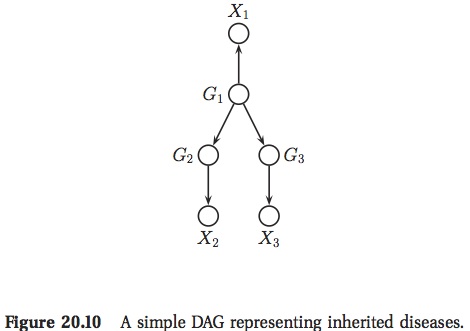

PART III

The Bayes net below comes from Kevin Murphy's text (Exercise 20.3).

1. Compute P(G1 = 2 | X2 = 50)

2. Compute P(X3 = 50 | X2 = 50)
