|

|

Elastic OS:

Creating an Auto-Scalable Linux for Data Center Computing


| We are developing an elastic operating system called 'ElasticOS' for the cloud. The goal is to support scaling of big data applications in the cloud without requiring them to be rewritten to explicitly incorporate elasticity. We are modifying the Linux kernel to achieve this goal. We have built a version of ElasticOS in Linux and have published a technical report on it at:

We've also released ElasticOS as open source on GitHub. We are expanding this work into the realm of serverless computing:

Our paper describing very early work and our vision on ElasticOS was published and presented at HotOS 2013:

We have also published a tech report on a related approach for memory scaling that we call FluidMem:

FluidMem has been released as open source on GitHub.

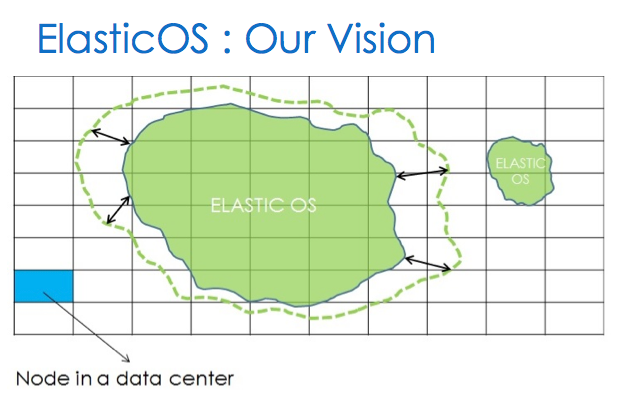

The concept of ElasticOS is shown in the figure. We are motivated by the trend towards cloud computing, wherein large data centers contain many racks of machines that are available for rental on demand by server applications processing big data and handling large volumes of network traffic and I/O. Current approaches to elasticity, such as elastic load balancers, require the application developer to be explicitly aware of elasticity, incorporating extra scripting logic into their programs to spawn extra virtual machines when the load reaches a certain threshold. Moreover, the application developer is forced to manually synchronize the application state between the multiple VM instances.

Instead, we aspire towards an approach whereby the application developer is largely unaware of whether their application server is running on one machine or a thousand machines. Application developers should focus on developing application logic and functionality, while the OS underneath the application automatically and elastically scales to "stretch" a process or thread simultaneously across many machines. We achieve stretching of virtual memory by creating an elastic page table (see paper), wherein thread execution can jump between nodes and operate on data pages in the address space that are partitioned or sharded across different nodes. In this way, we hope to exploit locality within a thread as well as concurrency across threads in order to cluster together on the same node the data pages that are accessed together in time.

Note that our approach is the opposite of distributed shared memory (DSM): in ElasticOS, data pages are sharded across different machines and execution moves towards a single page of data, whereas in DSM copies of data pages move towards execution. As a result, ElasticOS is "reverse DSM" and has the useful scalability property that N writes to a data page by N threads, each on a different machine, will cause 2N context jumps to and from the machine where the data page is placed, compared to N² consistency/coherency messages in DSM. We think this linear property will make the ElasticOS approach much more scalable.

Our approach also differs from Single System Image (SSI) cluster or grid operating systems, like Kerrighed and XtreemOS, as well as earlier efforts like MOSIX, openMosix and LinuxPMI, which use full process migration.
These approaches move a process from one machine to another to spread load, but are limited by the resources of the one machine where the process executes. In contrast, our approach stretches a process or thread simultaneously across multiple nodes to jointly and concurrently exploit the memory, CPU and networking of other machines. SSI approaches also tend to use some form of DSM, which suffers from the limitations noted earlier.

Our vision of ElasticOS extends beyond elasticizing memory, and includes elasticizing CPU and network I/O resources. That is, just as a large in-memory database application should be able to expand and take advantage of additional memory available on other rack machines, a network-intensive server such as a Web server should be able to take advantage of the additional NICs on other machines, and a compute-intensive job should be able to take advantage of the extra cores on other rack machines.

We are currently integrating our approach towards elasticity into the Linux kernel. Among the future topics of research that we intend to explore are:

We welcome feedback and participation, and are excited about the potential for this research project. |
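
As a rough illustration of the "reverse DSM" scalability argument made earlier on this page, the sketch below counts execution jumps in a toy model where each data page lives on exactly one node and a thread jumps to that node and back for every remote write. This is not ElasticOS kernel code: the names `ToyCluster`, `elastic_jumps`, and `dsm_messages` are hypothetical, and the DSM count is simply the N² figure quoted in the text rather than something derived from a particular coherence protocol.

```python
from dataclasses import dataclass


@dataclass
class ToyCluster:
    """Toy model: each data page lives on exactly one node (sharded, not replicated)."""

    page_owner: dict[int, int]  # page number -> node that holds the page
    jumps: int = 0              # total execution jumps observed so far

    def write(self, home_node: int, page: int) -> None:
        """A thread based on home_node writes one page, then returns home."""
        owner = self.page_owner[page]
        if owner != home_node:
            self.jumps += 1  # execution jumps to the node holding the page
            self.jumps += 1  # and jumps back to the thread's home node
        # A write to a locally held page needs no jump at all.


def elastic_jumps(n_threads: int) -> int:
    """N threads on N distinct machines each write the same remote page once."""
    # Assumption: node 0 holds the page; the threads live on nodes 1..N.
    cluster = ToyCluster(page_owner={0: 0})
    for node in range(1, n_threads + 1):
        cluster.write(home_node=node, page=0)
    return cluster.jumps


def dsm_messages(n_threads: int) -> int:
    """DSM message count, taken directly from the N^2 figure quoted in the text."""
    return n_threads ** 2


if __name__ == "__main__":
    for n in (1, 10, 100, 1000):
        print(f"N={n:5d}  jumps={elastic_jumps(n):6d}  DSM messages={dsm_messages(n):8d}")
```

Under these assumptions the jump count grows as 2N while the quoted DSM figure grows quadratically, which is the linear-versus-quadratic gap the text refers to. A real workload would also benefit from writes that find their page already local, which this toy model counts as zero jumps.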