In a 1996 paper (Chaos 6:95-107), Diana Dabby (diana.dabby@olin.edu) describes a technique that uses a chaotic mapping to generate variations on a musical piece. The basic idea is to map the pitch sequence onto a chaotic trajectory; this establishes a symbolic dynamics that links the attractor geometry and the structure of the musical piece. One then generates a new chaotic trajectory and inverts the mapping to generate a new pitch sequence. Sensitive dependence on initial conditions guarantees that the variation is different from the original; the attractor structure and the symbolic dynamics guarantee that the two resemble one another in both esthetic and mathematical senses.
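The pitch-mapping idea can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not Dabby's published code. It assumes the Lorenz system as the chaotic system and a fourth-order Runge-Kutta integrator. It also assumes a pairing rule in which each x-value of the new trajectory takes the pitch attached to the smallest reference x-value that is at least as large. The names `lorenz_x` and `chaotic_variation`, the `stride` parameter, and the initial conditions are all hypothetical.

```python
import bisect


def lorenz_x(x0, y0, z0, n, dt=0.01, sigma=10.0, rho=28.0, beta=8.0 / 3.0):
    """Return n successive x-values of a Lorenz trajectory (RK4 integration)."""
    def f(s):
        x, y, z = s
        return (sigma * (y - x), x * (rho - z) - y, x * y - beta * z)

    def step(s):
        k1 = f(s)
        k2 = f(tuple(a + 0.5 * dt * b for a, b in zip(s, k1)))
        k3 = f(tuple(a + 0.5 * dt * b for a, b in zip(s, k2)))
        k4 = f(tuple(a + dt * b for a, b in zip(s, k3)))
        return tuple(
            a + dt / 6.0 * (p + 2 * q + 2 * r + w)
            for a, p, q, r, w in zip(s, k1, k2, k3, k4)
        )

    state = (x0, y0, z0)
    xs = []
    for _ in range(n):
        xs.append(state[0])
        state = step(state)
    return xs


def chaotic_variation(pitches, ref_ic=(1.0, 1.0, 1.0),
                      new_ic=(0.999, 1.0, 1.0), stride=10):
    """Generate a variation of `pitches` from a second chaotic trajectory."""
    n = len(pitches)
    # Sample both trajectories every `stride` integration steps (assumption).
    ref = lorenz_x(*ref_ic, n * stride)[::stride]
    new = lorenz_x(*new_ic, n * stride)[::stride]

    # Attach pitch i to reference x-value i, then sort by x for fast lookup.
    pairs = sorted(zip(ref, pitches), key=lambda pair: pair[0])
    keys = [x for x, _ in pairs]

    variation = []
    for x in new:
        # Index of the smallest reference x-value that is >= x.
        i = bisect.bisect_left(keys, x)
        if i == len(keys):
            # x exceeds every reference value: fall back to the largest one
            # (an assumption made here so the sketch always returns a pitch).
            i = len(keys) - 1
        variation.append(pairs[i][1])
    return variation
```

Calling `chaotic_variation(melody)` with a list of MIDI note numbers returns a list of the same length drawn from the same pitches. Passing `new_ic` equal to `ref_ic` reproduces the original sequence, so the distance between the two initial conditions controls how far the variation strays.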

The program Chaographer implements a similar scheme for dance. The core of the chaotic mapping technique is the same, but many of the issues and tactics - together with much of the mathematics - are very different. The symbol set is one obvious distinction. There is a simple, well-established notational scheme for music, but body positions are much harder to represent; we use representational techniques from rigid-body mechanics to solve this problem. The mathematics of the mapping is also very different; Dabby uses a simple metric on a one-dimensional projection of the trajectory to define cells, whereas we work with a full, formal symbolic dynamics derived using computational geometry techniques. The amount of human intervention that is required is also different. In Dabby's scheme, both input and output are pitch sequences; a human expert translates these sequences to and from sound. Our variation generator takes an animation as input and generates an animation as output.

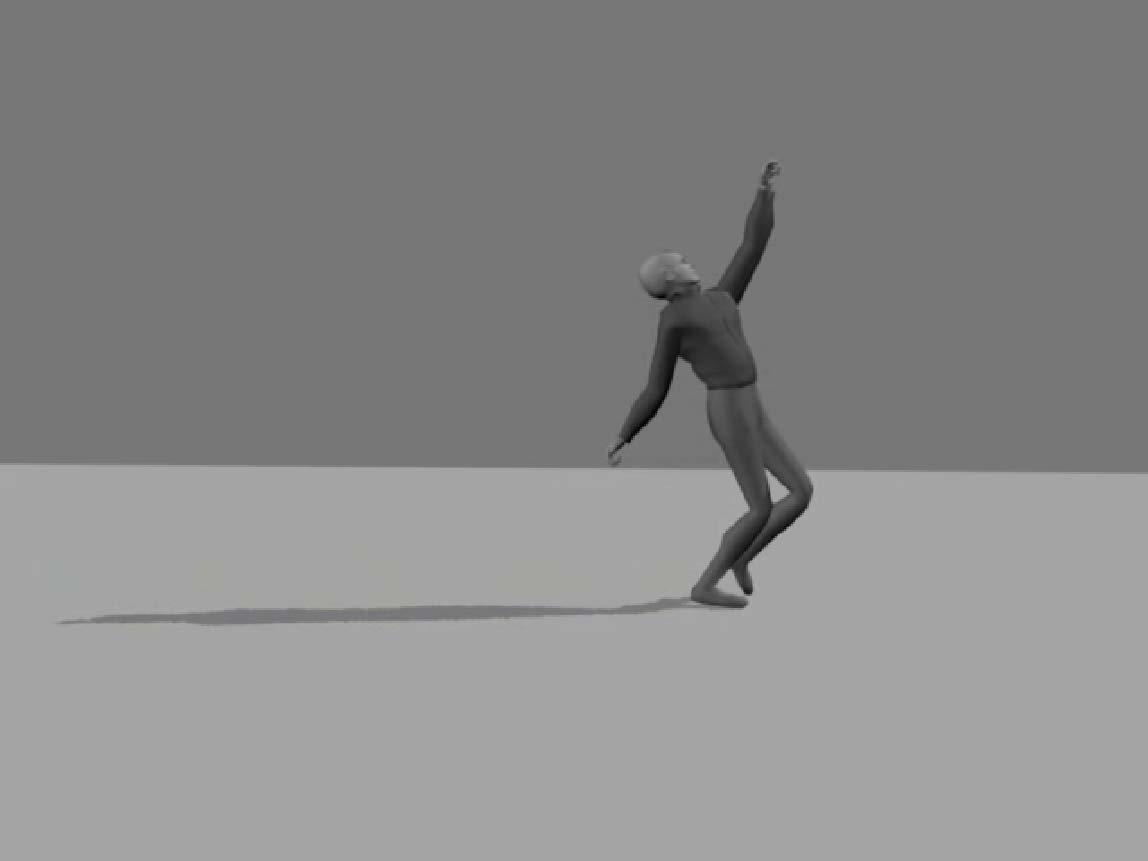

An original performance piece using six Chaographer variations and a human dancer, entitled "Con/cantation: chaotic variations," premiered in Boston in April of 2007, and has appeared since then in Boulder and Santa Fe. A still shot appears at the top of this page.

An original dance:

A chaotic variation on that piece:

Musical instruments can play arbitrary pitch sequences, but kinesiology and dance style impose a variety of constraints on consecutive body postures. To smooth any abrupt transitions introduced by the chaotic mapping, we have developed a class of corpus-based interpolation schemes that capture and enforce the dynamics of a dance genre.

The task of automatically generating stylistically consonant sequences between arbitrary body postures is quite difficult. We use a corpus of human movement to build a set of 44 weighted, directed graphs, one for each joint in the human body. Each vertex is a joint position, and each edge represents a transition between the two vertices that it links. Edges are weighted according to how often the corresponding transitions are observed in the corpus. Interpolating between two body positions is equivalent to finding the shortest ensemble-path through this set of graphs between the states that correspond to those positions. We use A* search to implement this, along with a special scoring function that enforces inter-joint coordination constraints (e.g., that the position of the pelvis influences what the hips are allowed to do). These machine learning techniques are implemented in a program called MotionMind.
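The graph construction and search can be illustrated for a single joint. The sketch below is not MotionMind's code. It assumes that joint positions have already been discretized into hashable states, and that an edge's cost is the negative log of its observed transition frequency, so that common transitions are cheap. It leaves out the ensemble search across all 44 graphs and the coordination scoring function. The names `build_transition_graph` and `a_star` are hypothetical, and the default heuristic is zero, which reduces A* to uniform-cost search.

```python
import heapq
import itertools
import math
from collections import Counter, defaultdict


def build_transition_graph(sequences):
    """Build a weighted, directed graph for one joint from corpus sequences.

    Each sequence is a list of discretized joint states. The cost of edge
    a -> b is -log(relative frequency of b among transitions out of a).
    """
    counts = defaultdict(Counter)
    for seq in sequences:
        for a, b in zip(seq, seq[1:]):
            counts[a][b] += 1
    graph = {}
    for a, outs in counts.items():
        total = sum(outs.values())
        graph[a] = {b: -math.log(c / total) for b, c in outs.items()}
    return graph


def a_star(graph, start, goal, heuristic=lambda state, goal: 0.0):
    """Return the cheapest state sequence from start to goal, or None."""
    tie = itertools.count()  # tie-breaker so the heap never compares paths
    frontier = [(heuristic(start, goal), next(tie), 0.0, start, [start])]
    best = {start: 0.0}
    while frontier:
        _, _, cost, state, path = heapq.heappop(frontier)
        if state == goal:
            return path
        if cost > best.get(state, math.inf):
            continue  # stale queue entry; a cheaper route was found already
        for nxt, weight in graph.get(state, {}).items():
            new_cost = cost + weight
            if new_cost < best.get(nxt, math.inf):
                best[nxt] = new_cost
                priority = new_cost + heuristic(nxt, goal)
                heapq.heappush(
                    frontier, (priority, next(tie), new_cost, nxt, path + [nxt])
                )
    return None
```

A search that returns `None` corresponds to a corpus in which the goal state is never reached from the start state. A domain-specific heuristic can be passed in place of the zero default, provided it never overestimates the remaining cost.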

Chaographer and MotionMind draw on techniques from nonlinear dynamics, numerical analysis, graph theory, statistics, rigid-body mechanics, and machine learning, as well as graphics and animation.

If you're running Mac OS X and using the Safari browser, you can skip the OS/tool-specific "how to" info in the following paragraph, because Apple did it right and that combination just works.

Some of these clips are in avi format and some are in mpeg format. Your browser should understand both of these formats. On Macs and PCs, you can download them and play them using Sparkle or QuickTime. To play the mpeg files using QuickTime, you'll need the mpeg extension; see the QuickTime site to find out how to obtain the player and/or the mpeg extension if your browser doesn't handle it automatically. QuickTime is only available for Windows and Mac OS; if you're on a Unix box, you'll need to use an mpeg viewer like mpeg_view. Click here for a page that has links to a variety of mpeg viewers for different architectures and operating systems.

Note that the performance of all movie-playing software degrades ungracefully if you're low on memory or if you have many other applications running.

The original sequences shown here were generated with the aid of a commercial human animation package called Life Forms. (Another good commercial figure animation package is Poser.)

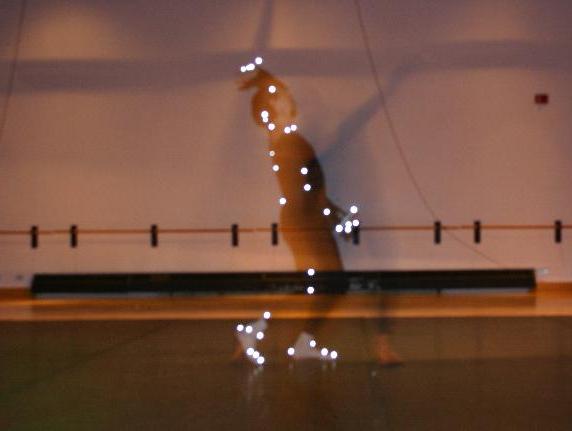

MotionMind finds stylistically consonant interpolation sequences between two positions. If it is given a corpus of ballet, for instance, and the following two poses:


(These images show a red figure on a black background, and they don't show up well on some monitors. If you have problems making out the figure, please try changing your brightness and contrast, or turning out the room lights.)

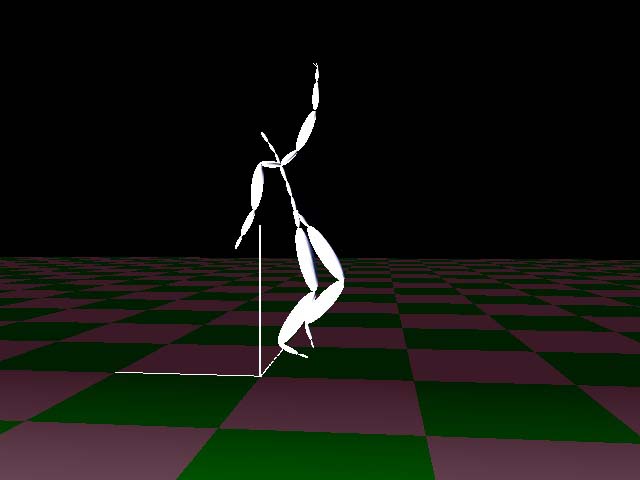

If the corpus that MotionMind uses is sparse, it may have trouble finding a path between a given pair of positions. This manifests in an interesting way: sequences that are stylistically consonant but very long. Presented with these two positions, for instance:


Coordination between joints is also an important constraint in human movement: one that, when violated, produces visibly awkward results. Click here to see an example of what happens if MotionMind is applied to a ballet corpus, but with its inter-joint coordination search heuristics disabled so it does not enforce coordination.

In order to build finite representations of these movement sequences, we had to discretize the angles of the joints. This is analogous to "snapping" objects to a grid in computer drawing applications, but it has some surprising effects when used to quantize human motion. The animation here shows a quantized version of a ballet adagio in blue, with the original dance superimposed upon it in red. Interestingly enough, side-by-side comparisons of the individual frames do not show such striking differences. The human visual perception system appears to be very sensitive to small variations in joint angles in a moving figure: small changes seem to violate the "motif" of the motion.
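The grid-snapping analogy can be made concrete with a small sketch. It assumes, purely for illustration, that each joint is described by a single angle in degrees and that the grid is uniform. The actual joint representation and bin widths are not specified here, and the names `quantize_angle`, `quantize_pose`, and `step_deg` are hypothetical.

```python
def quantize_angle(angle_deg, step_deg=10.0):
    """Snap one joint angle to the nearest multiple of step_deg."""
    return round(angle_deg / step_deg) * step_deg


def quantize_pose(pose, step_deg=10.0):
    """Quantize every joint angle in a pose (a dict of joint name -> degrees)."""
    return {joint: quantize_angle(a, step_deg) for joint, a in pose.items()}


def max_quantization_error(frames, step_deg=10.0):
    """Largest per-joint difference between original and quantized frames."""
    return max(
        abs(angle - quantize_angle(angle, step_deg))
        for pose in frames
        for angle in pose.values()
    )
```

With a uniform grid the error in any single angle is at most half the step size, which is consistent with the observation that individual frames look similar even though the moving figure does not.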
